I’ve had the misfortune of becoming acquainted with the seedy, spammy world of affiliate marketing. This misfortune begat several web applications that generate modest amounts of revenue each month. Finding the total revenue is a chore because most marketers run campaigns from several different affiliate networks. When I want to see how much money I’m making, I don’t want to log in to three or four different sites.

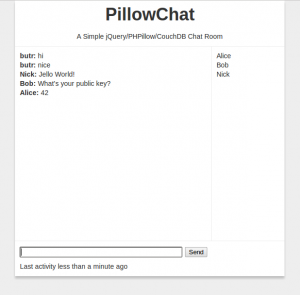

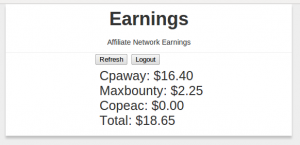

To mitigate this mild inconvenience, I built Earnings, an OO-PHP app designed to scrape daily affiliate network earnings from the myriad sites that owe you money. It looks like this:

Problem Description

Most affiliate networks don’t have APIs, so earnings data must be scraped from the HTML. Any scraper must get past the login screen and navigate to the earnings page to find the data. This is fragile because the sites change often, breaking both the login code and the string parsing used to extract the numbers. Additionally, networks come and go. When a network has no offers worth pursuing, marketers have no need to view its earnings. Furthermore, not all marketers work with the same networks. Any solution must make it easy to select which networks will be scraped.
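Since the markup can change without notice, the parsing step is worth making defensive. Here is a minimal sketch, assuming a hypothetical page that shows today's earnings as a dollar amount inside an element with the id `todays-earnings`. The id, the function name `extractEarnings`, and the sample markup are made up for illustration and don't come from any real network. It uses modern PHP type declarations (7.1 or later).

```php
<?php
/**
 * Pull a dollar amount out of scraped HTML.
 * Returns the amount as a float, or null when the expected markup is missing,
 * which usually means the site changed or the login failed.
 */
function extractEarnings(string $html): ?float
{
    // Hypothetical markup: <span id="todays-earnings">$1,234.56</span>
    $pattern = '/id="todays-earnings"[^>]*>\s*\$?([0-9][0-9,]*(?:\.[0-9]{1,2})?)\s*</';
    if (preg_match($pattern, $html, $matches) !== 1) {
        return null;
    }
    // Strip thousands separators before converting to a number.
    return (float) str_replace(',', '', $matches[1]);
}
```

Returning null instead of 0 lets the caller tell "earned nothing today" apart from "the scrape broke".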

Solution Overview

This implementation takes advantage of object orientation in PHP 5. The server performs the actual scraping in PHP classes that extend the abstract Network class. Each class is responsible for determining whether or not it has retrieved good data. If earnings data is unavailable due to bad credentials or a modified source site, a JSON-encoded error response is sent back to the client. Credentials for each affiliate network are stored server-side in a networks.json file.
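To make that structure concrete, here is a rough sketch of how the pieces could fit together. The constructor arguments, the exception type, the `ok`/`error` JSON keys, and the `BrokenNetwork` and `earningsResponse` names are all assumptions for illustration; the real Network class may be shaped differently.

```php
<?php
// Hypothetical shape of the base class; the real Network class may differ.
abstract class Network
{
    protected $username;
    protected $password;

    public function __construct($username, $password)
    {
        $this->username = $username;
        $this->password = $password;
    }

    // Subclasses log in, fetch the earnings page, and return a number.
    // They throw when the data looks wrong (bad login, changed markup).
    abstract public function getEarnings($startDate, $endDate);
}

// Stand-in subclass that simulates a failed scrape instead of using cURL.
class BrokenNetwork extends Network
{
    public function getEarnings($startDate, $endDate)
    {
        throw new RuntimeException('Earnings not found; check credentials or page layout.');
    }
}

// Turn either outcome into the JSON string sent back to the client.
function earningsResponse(Network $network, $startDate, $endDate)
{
    try {
        $amount = $network->getEarnings($startDate, $endDate);
        return json_encode(array('ok' => true, 'earnings' => $amount));
    } catch (RuntimeException $e) {
        return json_encode(array('ok' => false, 'error' => $e->getMessage()));
    }
}

$today = date('Y-m-d');
echo earningsResponse(new BrokenNetwork('user', 'secret'), $today, $today), "\n";
```

Keeping the "is this data good?" decision inside each subclass means the code that builds the response never needs to know anything about a particular site's markup.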

The JavaScript client makes jQuery POST requests to authenticate with the server and get the data for the earnings list. Application state is maintained in the earnings_state global.

Limitations

I haven’t exposed any server-side methods to grab earnings from individual networks. The client can only grab the entire batch through the getEarnings JavaScript function. This is problematic because the networks are scraped serially rather than in parallel, and it takes about 5 seconds to get earnings from 3 networks. Changing this wouldn’t be difficult: a little logic in earnings.php just needs to call the getEarnings PHP method of the Network class in question. The client could then request all networks asynchronously.
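The "little logic" could look roughly like the sketch below. It is not what earnings.php does today. The `network` request parameter, the `handleSingleNetwork` function, and the response keys are hypothetical, and `FakeNetwork` stands in for a real Network subclass so the example runs without scraping anything.

```php
<?php
// Stand-in for a real Network subclass so the sketch runs without any scraping.
class FakeNetwork
{
    private $amount;

    public function __construct($amount)
    {
        $this->amount = $amount;
    }

    public function getEarnings($startDate, $endDate)
    {
        return $this->amount;
    }
}

/**
 * Handle a request for one network's earnings.
 * $networks maps a network key to an object with a getEarnings() method;
 * $request plays the role of $_POST.
 */
function handleSingleNetwork(array $networks, array $request)
{
    $key = isset($request['network']) ? $request['network'] : '';
    if (!isset($networks[$key])) {
        return json_encode(array('ok' => false, 'error' => 'Unknown network: ' . $key));
    }
    $today = date('Y-m-d');
    $amount = $networks[$key]->getEarnings($today, $today);
    return json_encode(array('ok' => true, 'network' => $key, 'earnings' => $amount));
}

$networks = array(
    'maxbounty' => new FakeNetwork(12.5),
    'copeac'    => new FakeNetwork(3.25),
);
echo handleSingleNetwork($networks, array('network' => 'maxbounty')), "\n";
```

With an endpoint like this, the client could fire one request per network, so the total wait would be roughly the slowest network rather than the sum of all of them.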

Since all the earnings data lives scattered across the web on affiliate network sites and isn’t persisted on the server, it seems more efficient to cut out the PHP altogether and do everything client-side. Credentials could be saved in HTML5 localStorage. The major barrier is finding an elegant way to do cross-domain POST requests.

Finally, the getEarnings method has two date parameters to let the caller select a date range. These are ignored in the three Network classes I’ve written so far. They scrape earnings for the current day no matter what.
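If a Network class did start honoring those parameters, it would probably want to sanitize them first. Here is a small sketch, assuming the dates arrive as `Y-m-d` strings (the source doesn't specify a format) and that falling back to today matches the current behavior. `normalizeDateRange` is a hypothetical helper, not part of the app.

```php
<?php
/**
 * Normalize an optional date range to two 'Y-m-d' strings.
 * Missing or malformed dates fall back to today; a reversed range is swapped.
 */
function normalizeDateRange($start = null, $end = null)
{
    $today = date('Y-m-d');
    $clean = function ($value) use ($today) {
        if (!is_string($value)) {
            return $today;
        }
        $date = DateTime::createFromFormat('!Y-m-d', $value);
        // Comparing the round trip rejects overflow dates such as 2011-02-30.
        return ($date !== false && $date->format('Y-m-d') === $value) ? $value : $today;
    };
    $start = $clean($start);
    $end = $clean($end);
    // Dates in Y-m-d form sort correctly as plain strings.
    return strcmp($start, $end) <= 0 ? array($start, $end) : array($end, $start);
}
```

Calling it with no arguments yields today for both ends, which is what the existing classes effectively do.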

Contribute

Right now this app only supports CPAway.com, Copeac.com, and Maxbounty.com. I set it up so that it’s easy to add support for other networks in a modular fashion. To add your own network, do the following:

- Fork Earnings on GitHub

- Write a class that extends the abstract Network class. Look at Cpaway.php for an example of PHP cURL use.

- Add an entry in networks.json

- Send me a pull request so we can have a comprehensive earnings scraping solution.
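For the networks.json step, the sketch below shows one way the server could read such a file and skip incomplete entries. The file layout here (class name as key, `username` and `password` fields) is a guess for illustration, as is the `loadNetworkConfig` helper, so check the real networks.json in the repository for the actual format.

```php
<?php
// Hypothetical networks.json contents; the real file's keys may differ.
$json = <<<'JSON'
{
    "Cpaway":    {"username": "me@example.com", "password": "secret"},
    "Maxbounty": {"username": "me@example.com", "password": "secret"}
}
JSON;

/**
 * Decode the config and return only entries that carry both credentials.
 * Throws when the JSON itself is malformed.
 */
function loadNetworkConfig($json)
{
    $config = json_decode($json, true);
    if (!is_array($config)) {
        throw new InvalidArgumentException('networks.json is not valid JSON');
    }
    $usable = array();
    foreach ($config as $className => $entry) {
        if (is_array($entry) && !empty($entry['username']) && !empty($entry['password'])) {
            $usable[$className] = $entry;
        }
    }
    return $usable;
}

foreach (loadNetworkConfig($json) as $className => $credentials) {
    // In the real app this is where the matching Network subclass would be constructed.
    echo $className, ' => ', $credentials['username'], "\n";
}
```

Driving the list of networks from the config file is what lets each marketer pick which networks get scraped without touching the code.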